How to Scrape Alibaba Product Data and Seller Information

How to scrape Alibaba data with the help of the most widely used open-source framework for data mining, Scrapy.



Estimated reading time: 5 minutes

Introduction

Collecting product data from huge e-commerce websites like Alibaba gives you a great opportunity to do comprehensive competitive research, market analysis, and price comparison. Alibaba is one of the leading e-commerce portals, with an enormous product catalog.

However, extracting the required Alibaba data is a real challenge if you are not familiar with web scraping. If you know the basics and have some coding skills, read on to find out how to extract Alibaba product data with Scrapy, one of the most widely used open-source frameworks for web scraping.

3 Reasons to Scrape Alibaba

Data extracted from e-commerce websites can help businesses both inside and outside the e-commerce sector. Keep reading to learn three main reasons to scrape data from Alibaba.

Cataloging and listing

For any e-commerce business, listing and cataloging competitors’ products is one of the most important tasks. Without an up-to-date and comprehensive product list, it is impossible to compete in the e-commerce market. With an Alibaba extractor, you can easily collect Alibaba product information and build your own product list based on your target audience’s demands and preferences, or even create a new category of products.

Analyzing data

To do complete market research, companies strive to get insights from buyer feedback such as ratings and reviews. This user-generated content gives you a clear indication of how a particular product or brand is perceived. This kind of data can be used to improve your current products or launch new ones, as well as to build a positive brand reputation.

Comparing prices

Alibaba is well known for its affordable prices, which is why it is crucial to extract its prices for further price comparison and optimization. Almost everyone in e-commerce tracks product prices, and Alibaba may be the most popular source to track first. So, if you want to know market prices to optimize your pricing strategy, start with Alibaba scraping!

How to Create an Alibaba Crawler

Written in Python, Scrapy is one of the most efficient free frameworks for web scraping that enables users to extract, manage, and store information in a structured data format. It is perfectly adapted for web crawlers extracting details from various pages. Let’s move forward to learn how to scrape data from the leading marketplace.

Scrape Alibaba – Getting started

To create an Alibaba crawler, you need Python 3 and pip. Both can be downloaded from their official websites.

To install the necessary packages (Scrapy and Selectorlib), run pip install scrapy selectorlib.

Creating Alibaba Scrapy project

The next step is to create a Scrapy project for Alibaba in a folder named scrapy_alibaba, which will contain all the necessary files. The command is scrapy startproject scrapy_alibaba.

Creating the crawler

There is a built-in command in Scrapy called genspider that is responsible for generating the primary crawling template.

To generate our crawler, which creates the spiders/scrapy_alibaba.py file, the command should be:

The complete code should look like:

Extracting Product Data from Alibaba

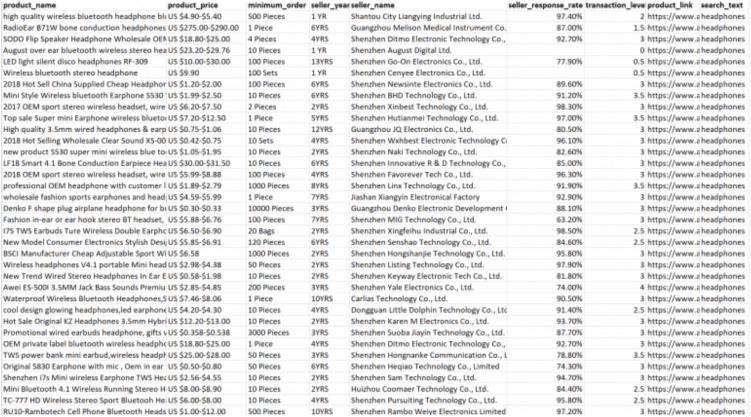

In this example, we’re going to extract the following fields for the earphones:

- Name of the product

- Price

- Image

- Link to the product

- Minimum number of orders

- Name of the seller

- The response rate of the seller

- Number of years as a seller on Alibaba
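One way to keep these fields together in code is a small record type. The sketch below is only illustrative: the class name AlibabaProduct and all field names are assumptions chosen to mirror the list above, not names taken from the original crawler.

```python
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class AlibabaProduct:
    """Hypothetical record mirroring the fields listed above."""

    name: str
    price: Optional[str] = None  # kept as text, since listings may show a range
    image: Optional[str] = None  # image URL
    link: Optional[str] = None  # product page URL
    minimum_order: Optional[str] = None
    seller_name: Optional[str] = None
    seller_response_rate: Optional[str] = None
    seller_years: Optional[str] = None  # years as a seller on Alibaba


# Example with made-up values, converted to a plain dict for export.
sample = AlibabaProduct(name="Wireless earphones", price="$1.50-$2.00")
row = asdict(sample)
```

Fields that a listing does not show simply stay None, which keeps every exported row the same shape.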

To extract the required data from Alibaba we’re going through the following 3 steps:

- Create a Selectorlib pattern

- Create a keyword file

- Export data in the required format

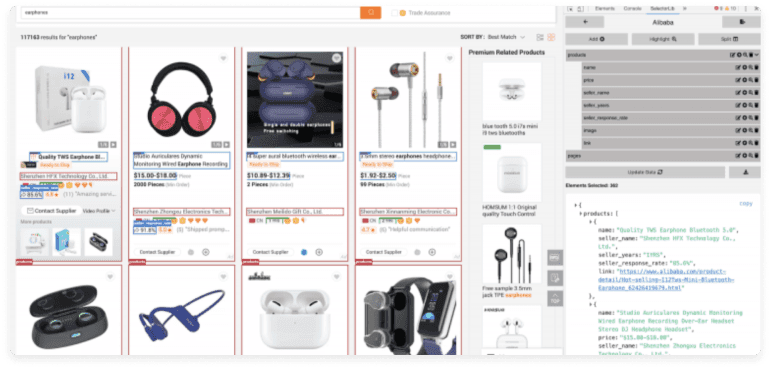

Creating a Selectorlib pattern for Alibaba

Selectorlib is a Python library with a companion Chrome extension that lets users mark the required data on a page and generate CSS selectors or XPaths to extract it. To learn more, see the Selectorlib documentation. Below you can see how we mark the fields we need to extract from Alibaba using Selectorlib.

Once you have marked all the required data, click the Export button to download the YAML file and save it as search_results.yml in the /resources folder.

Reading keywords

Now we’re going to set up the Alibaba crawler to read specific keywords from a file placed in the /resources folder. Let’s create a CSV file named keywords.csv there and use Python’s csv module to read it.
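The keyword-reading step could be sketched as follows, using only the standard library. The helper names read_keywords and build_search_urls are hypothetical, and the search URL pattern is an assumption that should be checked against the live site; the demo writes a temporary file that stands in for resources/keywords.csv.

```python
import csv
import tempfile
from pathlib import Path
from urllib.parse import quote_plus

# Assumed search URL pattern; verify it against the live site before use.
SEARCH_URL = "https://www.alibaba.com/trade/search?SearchText={query}"


def read_keywords(path):
    """Return the non-empty keywords from the first column of a CSV file."""
    with open(path, newline="", encoding="utf-8") as f:
        return [row[0].strip() for row in csv.reader(f) if row and row[0].strip()]


def build_search_urls(keywords):
    """Turn each keyword into a URL-encoded search URL."""
    return [SEARCH_URL.format(query=quote_plus(k)) for k in keywords]


# Demo with a temporary file standing in for resources/keywords.csv.
with tempfile.TemporaryDirectory() as tmp:
    keywords_file = Path(tmp) / "keywords.csv"
    keywords_file.write_text("earphones\nbluetooth speaker\n", encoding="utf-8")
    keywords = read_keywords(keywords_file)
    urls = build_search_urls(keywords)
```

Inside a spider, the resulting URLs would typically be turned into requests in start_requests, one per keyword.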

Exporting data into CSV or JSON

Scrapy has built-in exporters for JSON and CSV. To save the extracted data in the desired format, pass an output file to the crawl command with the -o option, for example -o alibaba.csv.
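The export format can also be declared in the project settings instead of on the command line. The fragment below is a minimal sketch for settings.py using Scrapy's FEEDS setting (available in Scrapy 2.1 and later); the output file names are placeholders.

```python
# settings.py (fragment)
# Output file names are placeholders; FEEDS requires Scrapy 2.1 or later.
FEEDS = {
    # Each key is an output path, each value the options for that feed.
    "alibaba.csv": {"format": "csv"},
    "alibaba.json": {"format": "json", "encoding": "utf8"},
}
```

With this in place, every crawl writes both files without any extra command-line options.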

The output will be saved in the same folder as the script. Here is an example of extracted data in CSV

The full code for our Alibaba crawler is given below (code source):

How to Scrape Alibaba – Questions and Answers

How to scrape Alibaba?

The best way to scrape Alibaba and its e-commerce projects like AliExpress is to use a Python-based crawling framework like Scrapy or a platform like ProxyCrawl for anonymous web scraping. They can help you get product names, prices, images, and seller information. For complex data extraction, you are welcome to consult DataOx experts.

How to scrape data from Alibaba to find the best suppliers?

Alibaba suppliers can be evaluated by several factors, such as product prices, shipping costs, and customer reviews. Moreover, you can filter for products offered by Gold Suppliers with Trade Assurance. These two filters are available in the Supplier Type section, which can be accessed via API during the web scraping process.

How to scrape Aliexpress sellers?

Each Alibaba seller has its own page with a product list. To scrape the data, you must first open the seller page URL. Simple scripts won’t work for multiple products because all pages have Load More buttons. The best option is to use a web scraper that can handle Load More buttons, hovers, and pagination, or to hire professional developers.

Final Thoughts

To sum up, creating an Alibaba crawler is not an easy task. So, if you decide to outsource Alibaba product data extraction to a dedicated web scraping service, a provider like DataOx will spare you the complications of web crawling.

Schedule a free consultation with our expert to reveal the whole list of our web scraping services and learn how DataOx can help you to scrape Alibaba data on a large scale.

Publishing date: Sun Apr 23 2023

Last update date: Sun Aug 20 2023