How to Scrape Glassdoor with Selenium and BeautifulSoup

Read DataOx's comprehensive guide to efficient data collection from Glassdoor, with and without coding.

Contact our experts to learn more.

Ask us to scrape the website and receive a free data sample in XLSX, CSV, JSON, or Google Sheets format in 3 days.

Scraping is our field of expertise: we have completed more than 800 scraping projects (including protected resources).

Introduction

Glassdoor is a popular website that allows users to search for jobs, research companies, and read reviews from current and former employees.

As a data scientist or analyst, you can scrape data from Glassdoor for various purposes, such as conducting sentiment analysis on employee reviews or collecting salary data for specific industries or companies. In this article, you will learn how to scrape the Glassdoor website, what you can extract, and how to do it right.

What is Glassdoor?

Glassdoor is the ultimate insider's guide to the world of work. It is a robust platform connecting job seekers with companies and employers, providing transparency into what it's like to work at a particular organization. With its extensive database of company reviews, salary reports, and interview questions, Glassdoor gives job seekers an inside look at the culture, values, and benefits of companies across various industries and sectors.

But Glassdoor is much more than just a job search engine. It’s a community of millions of professionals sharing their experiences and knowledge to help each other succeed in their careers. From expert career advice and tips to exclusive company reviews and ratings, Glassdoor is a one-stop shop for all your career needs.

Glassdoor is a valuable resource for businesses and researchers, providing access to employee reviews, salaries, job postings, and more. However, manually extracting this data can be a time-consuming and tedious process. You can automate data collection and analysis using web scraping techniques, saving time and improving accuracy.

Which Data Can Be Extracted by Scraping Glassdoor?

Scraping any website is not a trivial task because of the large amount of data and the site’s security measures, so you must be clear about precisely what data you need.

The Glassdoor website can provide access to a wide range of data, including:

- Company information: Glassdoor provides detailed company information, including company size, location, industry, and revenue.

- Job listings: Glassdoor is a popular platform for job seekers, and scraping can provide access to thousands of job listings across various industries and sectors.

- Salary and compensation data: Glassdoor publishes valuable salary and compensation data, including average salaries for different job titles and details about benefits and perks.

- Company reviews and ratings: Glassdoor allows current and former employees to leave reviews and ratings of companies, providing valuable insights into company culture, leadership, and employee satisfaction.

- Interview questions and tips: Glassdoor also provides information about interview questions and advice, allowing job seekers to prepare better for the interview process.

- Industry trends and insights: With access to Glassdoor's vast database of job listings and reviews, scraping can provide insights into industry trends, changes, and predictions for future growth and demand.

What Do You Need to Scrape Glassdoor?

To scrape the Glassdoor website, you will typically need a few essential tools and resources:

Web Scraping Software

A web scraping software program can help you automate collecting data from Glassdoor. There are many different web scraping tools available, both free and paid, that can help you scrape Glassdoor and other websites.

Proxy Server

A proxy server can help you avoid being blocked by Glassdoor or other websites during scraping. It routes your web traffic through a different IP address, which makes it harder for websites to detect and block your scraping activities.
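As a rough illustration of how proxy rotation might look in Python, the sketch below cycles through a list of proxy endpoints and yields them in the mapping format that the requests library accepts through its `proxies` argument. The proxy URLs and the `proxy_rotation` helper are hypothetical placeholders, not part of any specific provider's setup.

```python
from itertools import cycle

# Hypothetical proxy endpoints; replace them with the ones your provider gives you.
PROXY_URLS = [
    "http://user:pass@proxy1.example.com:8000",
    "http://user:pass@proxy2.example.com:8000",
]


def proxy_rotation(proxy_urls):
    """Yield requests-style proxy mappings, cycling through proxy_urls forever."""
    for proxy_url in cycle(proxy_urls):
        # requests expects one entry per URL scheme that should be proxied.
        yield {"http": proxy_url, "https": proxy_url}


rotation = proxy_rotation(PROXY_URLS)
first = next(rotation)
second = next(rotation)
third = next(rotation)  # wraps around to the first proxy again
```

Each request can then take the next mapping, for example `requests.get(url, proxies=next(rotation), timeout=10)`, so consecutive requests leave from different IP addresses.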

Data Storage and Management

As you scrape Glassdoor, you will accumulate a large amount of data that needs to be stored and managed. You will need a database or other storage solution that can handle large amounts of data and allow you to quickly search, sort, and analyze the data you collect.
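One lightweight storage option is SQLite, which ships with Python. The sketch below is only an assumption about how such a store could be laid out: the `jobs` table, its columns, and the `open_store` and `save_jobs` helpers are illustrative names, and the sample rows are made up.

```python
import sqlite3


def open_store(path=":memory:"):
    """Open (or create) a SQLite database with a simple jobs table."""
    conn = sqlite3.connect(path)
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS jobs (
            title    TEXT NOT NULL,
            company  TEXT NOT NULL,
            location TEXT,
            salary   TEXT,
            UNIQUE (title, company, location)
        )
        """
    )
    return conn


def save_jobs(conn, jobs):
    """Insert job dicts, silently skipping rows that are already stored."""
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            "INSERT OR IGNORE INTO jobs (title, company, location, salary) "
            "VALUES (:title, :company, :location, :salary)",
            jobs,
        )


conn = open_store()
sample_jobs = [
    {"title": "Data Scientist", "company": "Acme Corp",
     "location": "New York, NY", "salary": None},
    {"title": "Data Analyst", "company": "Globex",
     "location": "New York, NY", "salary": "$80K - $95K"},
]
save_jobs(conn, sample_jobs)
save_jobs(conn, sample_jobs)  # a second run does not duplicate the rows
stored = conn.execute("SELECT title, company FROM jobs ORDER BY title").fetchall()
```

The uniqueness constraint is a design choice that makes repeated scraping runs safe to re-import; pass a file path to `open_store` to keep the data between runs.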

Programming Skills (optional)

While many web scraping tools allow you to scrape websites without writing any code, having some programming skills can help you customize your tool and deal with any issues that arise during the scraping process. The sections below look at several ways to scrape Glassdoor, with and without programming knowledge.

Knowledge of Web Scraping Laws and Ethics

It’s essential to be aware of the legal and ethical considerations surrounding web scraping, including data privacy laws and website terms of use. Violating these laws and terms can result in legal action and damage your reputation. You can also read the Terms of Use on the Glassdoor website.

How to Scrape Glassdoor Without Coding?

Scraping Glassdoor without coding knowledge can be challenging. However, some online tools can help you scrape the data without writing any code. Here are the steps to follow:

- Search for a web scraping tool. Several web scraping tools are available online, such as Octoparse, Parsehub, and WebHarvy. These tools allow you to scrape data from a website without any coding knowledge. Check the offers of each of the services or choose a brand you already know.

- Create an account. Once you have selected a web scraping tool, create an account on their website. Some tools offer a free trial, while others require a subscription. The registration process is unlikely to cause you difficulties.

- Select the data. Navigate to the Glassdoor website and decide which data you want to scrape. The section above on extractable data lists precisely what can be pulled from Glassdoor.

- Set up the web scraping tool. Once you have selected the data you want to scrape, open the web scraping tool and set up the data extraction process. This usually involves choosing the website elements you want to scrape and defining the scraping rules.

- Run the scraper. After you have set up the web scraping tool, run the scraper to extract the data from the Glassdoor website. The extracted data will be saved in a format that can be easily exported to Excel or CSV.

- Export the data. Once the scraper has finished running, export the data to Excel or CSV format. You can then use the data for your research or analysis.

As you can see, many scraping services on the web can do all the hard work for you. You only have to choose the right one.

How to Scrape Glassdoor With Coding?

With programming knowledge, you do not have to deal with intermediaries (apart from technical necessities such as a proxy server) or pay for something you can create yourself. You can scrape the Glassdoor website using various programming languages and libraries, such as Python, Beautiful Soup, and Selenium.

Here are some steps to follow:

- Install the necessary software. To start, you must install Python and the necessary libraries, such as BeautifulSoup and Selenium. You can install these libraries using pip, a package manager for Python.

- Understand the website structure. Before you start scraping, you need to understand the structure of the Glassdoor website. This involves identifying the HTML tags and attributes that contain the data you want to scrape.

- Write the code. Once you understand the website structure, you can start writing the code. This typically involves using libraries like BeautifulSoup to parse the HTML code and extract the relevant data. If you need to interact with the website, you may also need to use a library like Selenium to automate user interactions.

- Test the code. After writing the code, test it on a small sample of data to ensure it works correctly. This will also help you identify any errors or bugs in the code.

- Scale up the scraping. Once you have tested the code, you can scale up the scraping to extract data from the entire website. This may involve using loops and other programming constructs to automate the scraping process.

- Store the data. After scraping the data, store it in a format that is easy to analyze, such as CSV or JSON. You can also store the data in a database for easier management and analysis.
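The "scale up" step above can be sketched as a loop that visits several pages, pauses between requests, and keeps going when a single page fails. This is a minimal sketch under stated assumptions: `scrape_pages` is a hypothetical helper, and the `fetch` and `parse` callables stand in for whatever download and parsing functions you write. The fake versions below exist only so the example runs without network access.

```python
import time


def scrape_pages(urls, fetch, parse, delay_seconds=2.0):
    """Fetch each URL in turn, parse it, and pause between requests."""
    records = []
    failed = []
    for index, url in enumerate(urls):
        if index > 0:
            time.sleep(delay_seconds)  # space out requests to stay polite
        try:
            html = fetch(url)
        except Exception as error:  # one bad page should not stop the run
            failed.append((url, str(error)))
            continue
        records.extend(parse(html))
    return records, failed


# Stand-ins for real download and parsing functions (hypothetical).
def fake_fetch(url):
    if url.endswith("page2"):
        raise RuntimeError("blocked")
    return f"<html>{url}</html>"


def fake_parse(html):
    return [{"source": html}]


records, failed = scrape_pages(
    ["https://example.com/page1", "https://example.com/page2", "https://example.com/page3"],
    fake_fetch,
    fake_parse,
    delay_seconds=0,
)
```

Returning the failed URLs separately makes it easy to retry them later instead of restarting the whole run.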

Next, you will see some code examples for BeautifulSoup and Selenium.

Scrape Glassdoor using BeautifulSoup

Here is a detailed guide on how to scrape Glassdoor using Beautiful Soup:

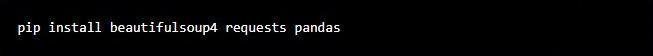

1. First, make sure you have the required libraries installed. You will need Beautiful Soup, requests, and pandas. You can install these using the following command:

pip install beautifulsoup4 requests pandas

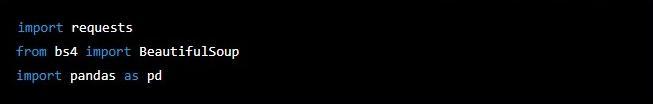

2. Next, import the libraries into your Python script:

    import requests
    from bs4 import BeautifulSoup
    import pandas as pd

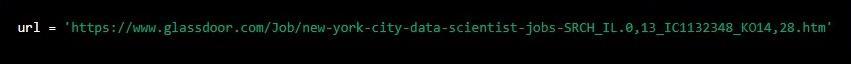

3. Define the URL of the Glassdoor page you want to scrape. For example, if you want to scrape job listings in New York City for the keyword “data scientist,” you could use the following URL:

    url = 'https://www.glassdoor.com/Job/new-york-city-data-scientist-jobs-SRCH_IL.0,13_IC1132348_KO14,28.htm'

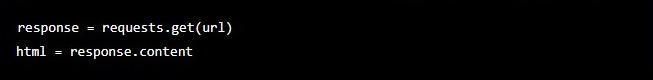

4. Use the requests library to retrieve the HTML content of the page:

    response = requests.get(url)
    response.raise_for_status()  # stop early if the request was rejected
    html = response.content

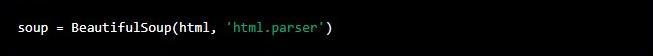

5. Use Beautiful Soup to parse the HTML content:

    soup = BeautifulSoup(html, 'html.parser')

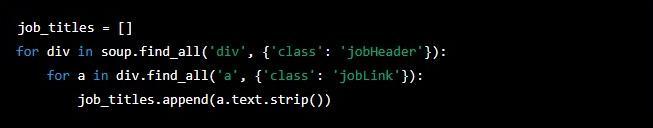

6. Use the Chrome Developer Tools or a similar tool to inspect the page and identify the HTML tags and classes that contain the data you want to scrape. For example, if you want to scrape the job titles, you could use the following code:

    job_titles = []
    for div in soup.find_all('div', {'class': 'jobHeader'}):
        for a in div.find_all('a', {'class': 'jobLink'}):
            job_titles.append(a.text.strip())

This code finds all the <div> tags with the class ‘jobHeader’, then finds all the <a> tags with the class ‘jobLink’ within those <div> tags, and extracts the text of the <a> tags. It then adds the text to the job_titles list.

Repeat step 6 for any other data you want to scrape, such as company names, salaries, and job locations.
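When you repeat the extraction for several fields, some listings may lack a value (a salary, for example), which would leave the per-field lists with different lengths. One way to avoid that, sketched below, is to parse one listing at a time and record `None` for missing fields. The sample HTML and the `jobCard`, `employerName`, and `salaryEstimate` class names are invented for illustration; only `jobHeader` and `jobLink` come from the example above, and the real page structure may differ.

```python
from bs4 import BeautifulSoup

# Made-up markup that mimics two listings; the second has no salary.
SAMPLE_HTML = """
<ul>
  <li class="jobCard">
    <div class="jobHeader"><a class="jobLink">Data Scientist</a></div>
    <span class="employerName">Acme Corp</span>
    <span class="salaryEstimate">$120K - $150K</span>
  </li>
  <li class="jobCard">
    <div class="jobHeader"><a class="jobLink">ML Engineer</a></div>
    <span class="employerName">Globex</span>
  </li>
</ul>
"""


def text_or_none(card, selector):
    """Return the stripped text of the first match, or None if nothing matches."""
    node = card.select_one(selector)
    return node.get_text(strip=True) if node else None


def parse_cards(html):
    """Build one dict per listing so every row has the same keys."""
    soup = BeautifulSoup(html, "html.parser")
    rows = []
    for card in soup.select("li.jobCard"):
        rows.append({
            "Job Title": text_or_none(card, "div.jobHeader a.jobLink"),
            "Company": text_or_none(card, "span.employerName"),
            "Salary": text_or_none(card, "span.salaryEstimate"),
        })
    return rows


rows = parse_cards(SAMPLE_HTML)
```

A list of dicts like `rows` can be passed directly to `pd.DataFrame(rows)`, with missing values showing up as empty cells.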

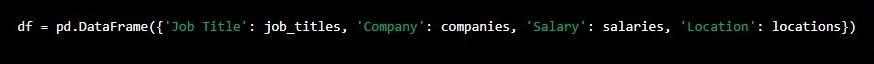

7. Once you have scraped all the data you want, you can store it in a pandas DataFrame:

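    df = pd.DataFrame({'Job Title': job_titles, 'Company': companies, 'Salary': salaries, 'Location': locations})

This code creates a DataFrame with the job titles, companies, salaries, and locations as columns. The companies, salaries, and locations lists are the ones you built by repeating step 6, and all four lists must have the same length.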

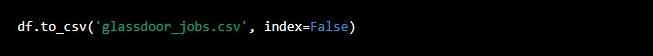

8. You can then save the DataFrame to a CSV file:

    df.to_csv('glassdoor_jobs.csv', index=False)

This code saves the DataFrame to a file named ‘glassdoor_jobs.csv’.

Glassdoor Scraper on Selenium

This is a small guide on how to scrape Glassdoor with Selenium.

Prerequisites

Before starting, make sure that you have the following:

- Python 3 installed on your machine.

- Selenium package installed on your machine.

- Chrome or Firefox browser installed on your machine.

- Chrome or Firefox webdriver installed on your machine.

- Glassdoor account.

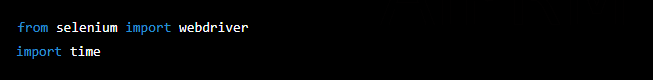

Step 1: Import the Required Libraries

First, import the necessary libraries in your Python script. In this case, you will need the Selenium and time libraries.

    from selenium import webdriver
    import time

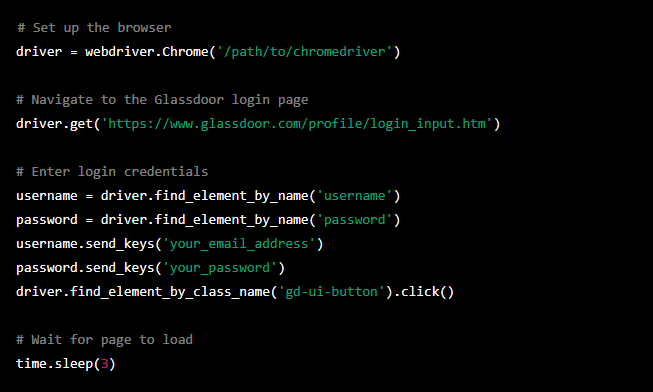

Step 2: Set Up the Browser and Login

Next, set up the browser and log in to Glassdoor. First, create a new browser instance using the webdriver module. Then navigate to the Glassdoor login page and enter your credentials.

    from selenium.webdriver.chrome.service import Service
    from selenium.webdriver.common.by import By

    # Set up the browser
    driver = webdriver.Chrome(service=Service('/path/to/chromedriver'))
    # Navigate to the Glassdoor login page
    driver.get('https://www.glassdoor.com/profile/login_input.htm')
    # Enter login credentials
    username = driver.find_element(By.NAME, 'username')
    password = driver.find_element(By.NAME, 'password')
    username.send_keys('your_email_address')
    password.send_keys('your_password')
    driver.find_element(By.CLASS_NAME, 'gd-ui-button').click()
    # Wait for page to load
    time.sleep(3)

Note that you will need to replace '/path/to/chromedriver' with the path to your ChromeDriver executable. To use Firefox instead, create the driver with webdriver.Firefox and point the Service class from selenium.webdriver.firefox.service at geckodriver. Selenium 4.6 and later can also locate a matching driver automatically if you call webdriver.Chrome() with no arguments.

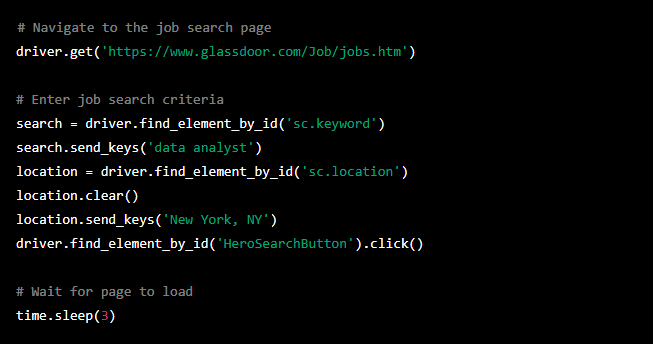

Step 3: Search for Jobs

Once you have logged in, you can search for jobs on Glassdoor. To do this, navigate to the job search page and enter your search criteria.

    from selenium.webdriver.common.by import By  # locator strategies used below

    # Navigate to the job search page
    driver.get('https://www.glassdoor.com/Job/jobs.htm')
    # Enter job search criteria
    search = driver.find_element(By.ID, 'sc.keyword')
    search.send_keys('data analyst')
    location = driver.find_element(By.ID, 'sc.location')
    location.clear()
    location.send_keys('New York, NY')
    driver.find_element(By.ID, 'HeroSearchButton').click()
    # Wait for page to load
    time.sleep(3)

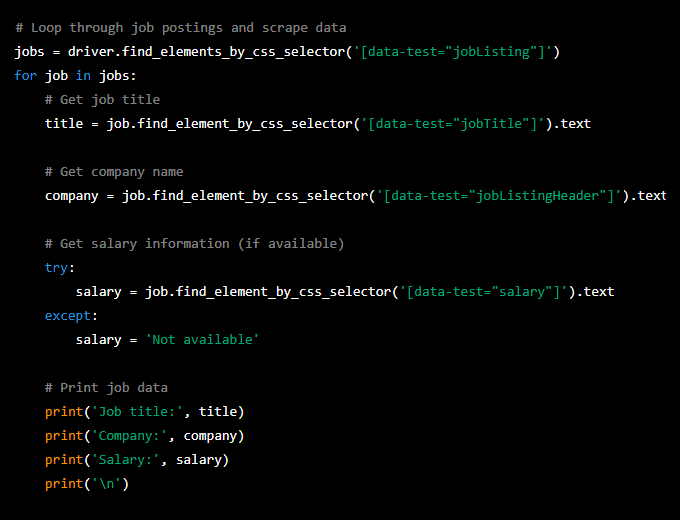

Step 4: Scrape Job Data

Now that you have performed a job search, you can scrape data on the job postings. You need to loop through each job posting on the page and extract the job title, company name, and salary information (if available).

    from selenium.common.exceptions import NoSuchElementException
    from selenium.webdriver.common.by import By

    # Loop through job postings and scrape data
    jobs = driver.find_elements(By.CSS_SELECTOR, '[data-test="jobListing"]')
    for job in jobs:
        # Get job title
        title = job.find_element(By.CSS_SELECTOR, '[data-test="jobTitle"]').text
        # Get company name
        company = job.find_element(By.CSS_SELECTOR, '[data-test="jobListingHeader"]').text
        # Get salary information (if available)
        try:
            salary = job.find_element(By.CSS_SELECTOR, '[data-test="salary"]').text
        except NoSuchElementException:
            salary = 'Not available'
        # Print job data
        print('Job title:', title)
        print('Company:', company)
        print('Salary:', salary)
        print('\n')
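Printing is handy for a first check, but you will usually want to keep the results. A minimal sketch, assuming you collect each posting as a dict inside the loop instead of printing it: the `save_rows` helper, the field names, and the sample row are illustrative only.

```python
import csv

FIELDS = ["title", "company", "salary"]


def save_rows(rows, path):
    """Write a list of job dicts to a CSV file with a header row."""
    with open(path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=FIELDS)
        writer.writeheader()
        writer.writerows(rows)


# In the scraping loop you would append one dict per posting, for example:
# rows.append({"title": title, "company": company, "salary": salary})
rows = [
    {"title": "Data Analyst", "company": "Acme Corp", "salary": "Not available"},
]
```

After the loop finishes, a call such as `save_rows(rows, "glassdoor_selenium_jobs.csv")` writes everything to disk in one go.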

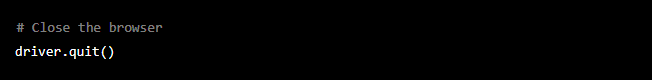

Step 5: Close the Browser

Finally, close the browser to end the session.

    # Close the browser
    driver.quit()

That’s it! Note that this is just a basic example, and you can modify the script to scrape additional data or perform more complex searches.

Conclusion

Web scraping Glassdoor can be an efficient and effective way to collect valuable data for businesses and researchers. By following these steps and using the right web scraping tools, you can automate data collection and analysis, saving time and improving accuracy.

You have a choice: do the scraping yourself, relying on your own skills, or use ready-made solutions from popular services. Whichever you choose, keep in mind that the speed, quality, and reliability of the extracted data depend on experience and the right tools.

Would you like to learn more about Glassdoor scraping and benefit from our expertise?

Contact us for a free consultation.

Publishing date: Sun Apr 23 2023

Last update date: Wed Apr 19 2023